In a previous blog post, I mentioned why regularly shrinking your databases is not the right way to reclaim space. I also mentioned at the end of the blog post that the proper way to deal with database space issues is to monitor your disk space utilization and do proper capacity planning. That’s where tools like SentryOne (now part of SolarWinds), System Center Operations Manager (OpsMgr,) Idera, SolarWinds, Quest Software (now a part of Dell,) etc. come into the picture. These monitoring tools not just check for the health of your databases but they also collect operational data such as CPU, memory and disk space utilization.

But, what if you don’t have those monitoring tools yet? Where do I get the operational data for my database disk capacity? I do have the current size of the databases but that wouldn’t be enough to do capacity planning and forecasts. How else can I perform capacity planning for my storage requirements?

Backups Are More Than Just For Disaster Recovery

I ended the previous blog post with a question: “do you have database backups?” If you do (and I sure hope so,) you’re in luck. I bet that you also have a place where you store those database backups for archiving such as a tape, file server or even a backup server. As a high availability and disaster recovery expert, I used to think that backups are merely for the “ooopppss” moments that happen every once in a while. In fact, that’s how I sell the importance of backups: they’re an insurance policy. But a couple of years ago, I’ve learned that backups are more than just for disaster recovery. One of my customers asked me to recommend a hardware configuration for upgrading their existing database server. One of their main concerns is storage capacity and they wanted to plan and budget for at least two years worth of storage. Since they don’t have a monitoring tool in place, I started to look elsewhere to find the operational data that I need. And, guess where I found them? That’s right, on the backup files.

Using Backups For Storage Capacity Planning (and even with Availability Groups)

Since full backups in a SQL Server database contain all of the data and enough transaction log records to recover the database, the backup size is a good measurement of the required disk space. If you have your backups, you can check the file sizes of all the previous full backups that you have. The only challenge is if you only keep a day’s worth of backup files. That’s no different from checking the current size of your database with a little bit of free space added. There are a couple of places to look for the historical data about your backups.

- – Backup server. If you are using an enterprise backup tool, it has it’s own data collection utility specifically for backups. This includes the name of the server where the backup is from, the date/time when the backup was taken, the size of the backup file, duration, etc. This is good enough information to use for storage capacity planning.

- – msdb database in SQL Server. Every database backup you take in SQL Server will have a metadata. That metadata is stored in the msdb database. That’s where SQL Server Management Studio takes the information to tell you what backups were taken previously.

This is certainly not SQL Server Management Studio. But it’s good for illustration purposes.

You can use the query below to retrieve a list of all the full database backups taken for a specific database, the size and the date/time when they were taken.

SELECT backup_start_date, backup_size/1073741824 AS backup_size_GB

FROM msdb.dbo.backupset a INNER JOIN msdb.dbo.backupmediafamily b

ON a.media_set_id = b.media_set_id

WHERE TYPE='D' and database_name='sampleTestDB'

Even if you only have a month’s worth of backup history information, it will be good enough to get you started. This is where we’ll need Microsoft Excel. Here’s how you can use Excel with your backup history information to use for storage capacity planning:

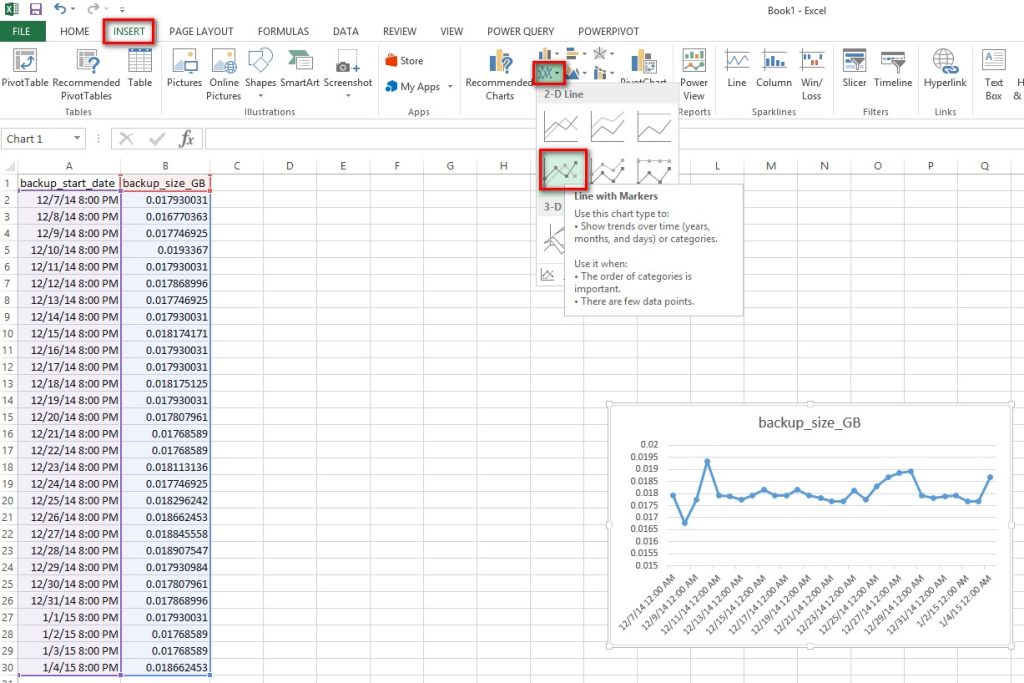

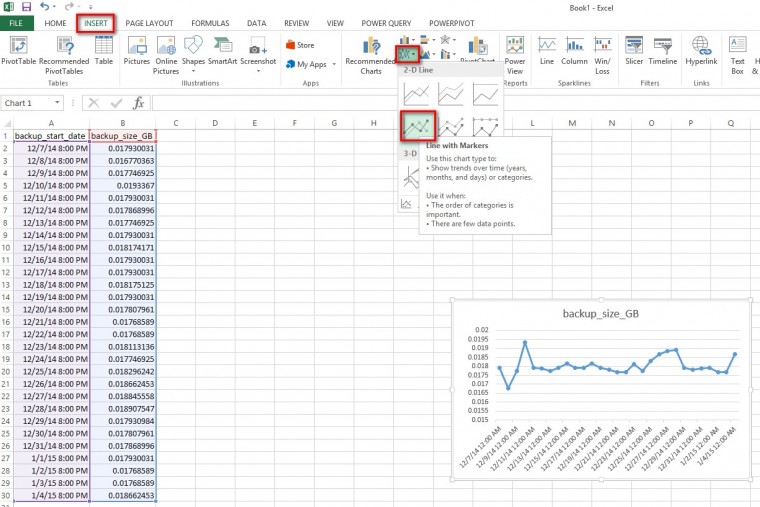

- 1. Enter your backup history information into an Excel spreadsheet. This is the easy part. You can just copy-and-paste the results of the query provided from SQL Server Management Studio to Excel

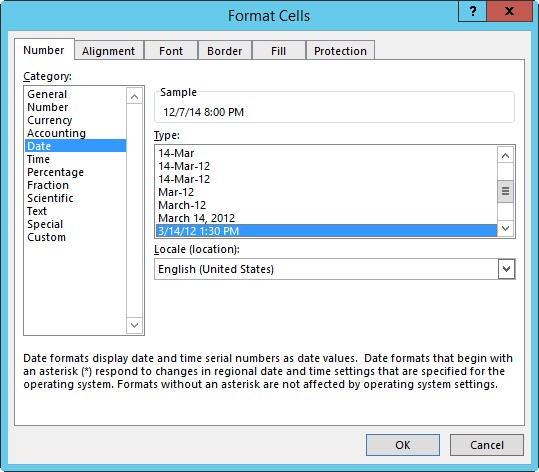

- 2. Format the data to use the appropriate data type. Sometimes, Excel does not use the appropriate data type for the copied data. Make sure that you format the data to use the appropriate data type. This will be helpful when we go to the next step.

- 3. Create a line graph based on the data. Select the data range in the Excel spreadsheet, click the Insert tab and select a line graph.

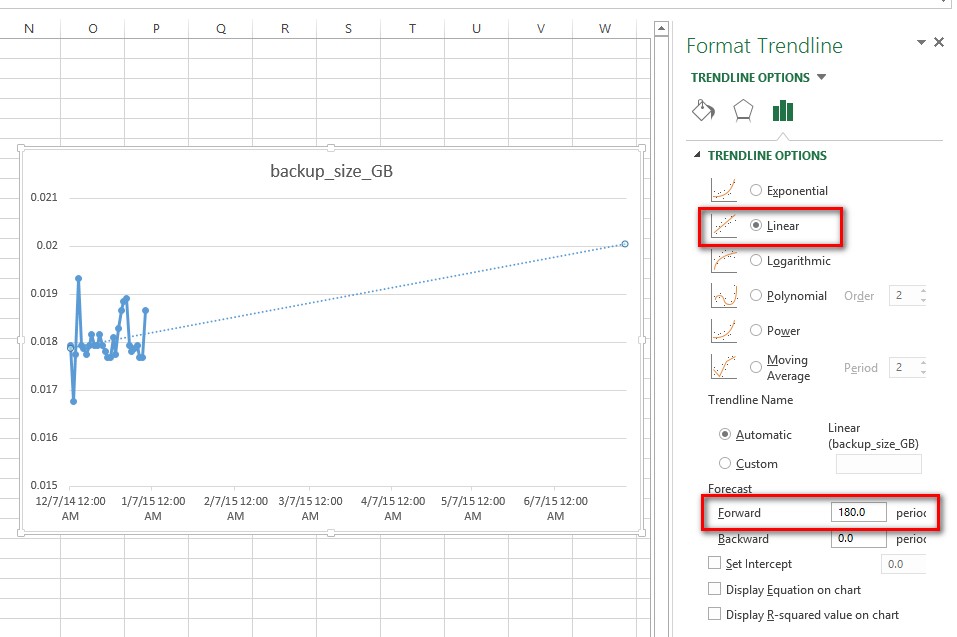

- 4. Create a trendline from the given line graph. Once you have the line graph, right-click anywhere near the data points and select Add Trendline. We will add a linear trend line to forecast the database backup size for the next 6 months.

We now have a crude way of doing linear regression analysis of our storage capacity requirement for a specific database. All we have to do now is do this for all of our databases, forecast data for a specific period, sum it all up and multiply by a factor of safety value – say twice the total storage requirement. Now, I don’t want to claim expertise in statistical analysis especially since I almost failed my statistics course in college. But this is a relatively easy way to forecast disk capacity requirements if you don’t have monitoring in place.

There’s a lot of things we can do with Excel. In fact, I did this exact same thing with another customer who requested for a storage capacity requirement for a three (3) replica Availability Group. Since the storage requirement for an Availability Group replica is the same as the primary replica, it’s safe to just multiply the storage requirements with the number of replicas.

Let me know what else you come up with in your day-to-day tasks managing SQL Server databases with Excel.

Additional Resources

- – Add, change, or remove a trendline in an Excel chart

- – Backup History and Header Information (SQL Server)

This is quite ingenious 🙂

Just making the most of what one has and using it cleverly. thanks.

Sometimes, it takes a different perspective to make the most out of what we have. Since we already have the backup history information, we might as well use it for something like storage capacity forecasting.

Thanks for reading my blog.

Excellent tip Edwin!!! Great work!

Thanks for reading my blog. Will you be using this information anytime soon?

Hi Edwin, love this article. I actually have used backup info in the past for capacity forecasting database sizes. In my case the requirement was for data, log and native backups (which were sitting on a windows drive). Also, using the full backup sizes and combined it with the existing data file property, current space available on that drive I was able to provide an approximate period where the drive will be full so the client can prepare in advance. As far as the log files size future projection, I have done similar work but instead of the using full backup info, I reviewed the growth rate of the largest normal tlog backup file that occurs in between full/diff backups along with the log file property. Was a lot of manual work though and it wouldn’t be possible without a good backup history available in msdb.

Hi Daylinda,

Thanks for reading my blog. Those are really smart ways to use the backup history information in msdb. Besides, these are forecasts – a.k.a intelligent guesses. Backed by data that we have, we are making intelligent guesses of how much disk capacity we need in the future. That way, when we request for additional capacity in the future, we can justify it with supporting data.

I just tried this and it works like a charm! Thanks Edwin!

That’s great to hear. Now you know how BI professionals get started to get insights and become data analysts 🙂